Using ChatGPT to automatically add annotations to your Omnivore saved articles [Guest Post]

Today’s article is a guest post from our community member and developer Jan Beck about his project using ChatGPT to add annotations automatically in Omnivore.

With the launch of OpenAI’s generative language model ChatGPT, it became possible to use AI to interact with your notes, documents, and searches. Our community often discusses how to use generative AI in a helpful and secure way.

We asked Jan Beck, a developer and an Omnivore community member, about his side project using ChatGPT to add annotations automatically to saved items in Omnivore. Jan Beck is a passionate solopreneur and freelance web developer with 15+ years of experience in building user-friendly products and services for the web. His latest project is WealthAssistant.app, a GPT-4-powered financial simulator built with privacy in mind to help anyone plan and realize their financial life goals.

Here’s what Jan told us.

What you need to start

Jan’s solution is a serverless function that uses Omnivore’s API, Omnivore’s webhooks, and OpenAI’s chat completions API. It can automatically add annotations to items saved in Omnivore whenever a specific label is attached to them. For example, you can add a summarize label to an article to get its key insights. The repository can be deployed to Vercel; it might work with other providers, but it hasn’t been tested with them yet.
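To make the flow more concrete, here is a minimal TypeScript sketch of two steps such a function performs: deciding whether to react to a webhook call, and building the request body for OpenAI. It is not code from Jan’s repository. The `WebhookPayload` shape (with `labels` and `content` fields) is a hypothetical simplification, since Omnivore’s real webhook payload may be structured differently, and the prompt wording and model ID are illustrative. Only the body layout (a `model` plus a `messages` array of role/content pairs) follows OpenAI’s chat completions format.

```typescript
// Hypothetical, simplified webhook payload. Omnivore's real payload
// may use different field names and nesting.
interface WebhookPayload {
  labels: string[]; // names of the labels attached to the saved item
  content: string; // article text to summarize
}

// Message and request shapes used by OpenAI's chat completions API.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
}

// React only when the trigger label is attached (case-insensitive match).
function shouldAnnotate(payload: WebhookPayload, triggerLabel = "summarize"): boolean {
  const wanted = triggerLabel.toLowerCase();
  return payload.labels.some((label) => label.toLowerCase() === wanted);
}

// Build the JSON body to POST to the chat completions endpoint.
// The system prompt is illustrative, not the one used in the repository.
function buildSummaryRequest(payload: WebhookPayload, model: string): ChatCompletionRequest {
  return {
    model,
    messages: [
      { role: "system", content: "Summarize the key insights of this article in one paragraph." },
      { role: "user", content: payload.content },
    ],
  };
}

// Usage: after sending this body to OpenAI, the function would save the
// reply as a note on the item through Omnivore's API (not shown here).
const example: WebhookPayload = { labels: ["Summarize"], content: "Article text..." };
if (shouldAnnotate(example)) {
  const body = buildSummaryRequest(example, "gpt-3.5-turbo-16k");
  console.log(body.messages.length); // 2
}
```

Keeping the label check separate from the request builder makes it cheap to ignore webhook calls that should not trigger a (paid) OpenAI request.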

Jan recorded a short demo video that walks us through the setup:

A few things to keep in mind

We talked with Jan to clarify a few details so that everyone has the full picture.

Bugs and technical support

The solution is not complicated to set up, but it may not be particularly user-friendly for those who aren’t familiar with development. Understandably, Jan can’t offer support beyond bug fixes: “I’d like to manage expectations from the start and also say I can’t offer support beyond bug fixes if people have issues working with it. Vercel, OpenAI, and Omnivore all offer excellent documentation, however.”

Costs

The video mentions that everything is free, but there can be costs depending on your OpenAI and Vercel plans. OpenAI charges per token, and the price varies by model. Jan tested the system using GPT-3.5: “I tested this in the last few days, made about 100 API requests that used 450K tokens, and it cost me $1.49. Still, I’d add a disclaimer here and encourage people to use this sparingly in the beginning to understand how much usage is going to cost.

“By default, the GPT-3.5 Turbo model with a 16K-token context window is used. You can switch to GPT-4 Turbo, which is more costly but smarter and has a 128K-token window, via environment variables. For reference, this article used about 4K tokens to generate a one-paragraph summary, so the default model could handle an article about four times as long.

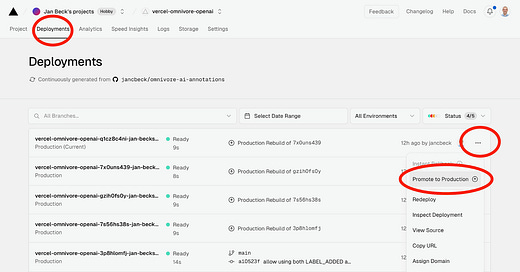

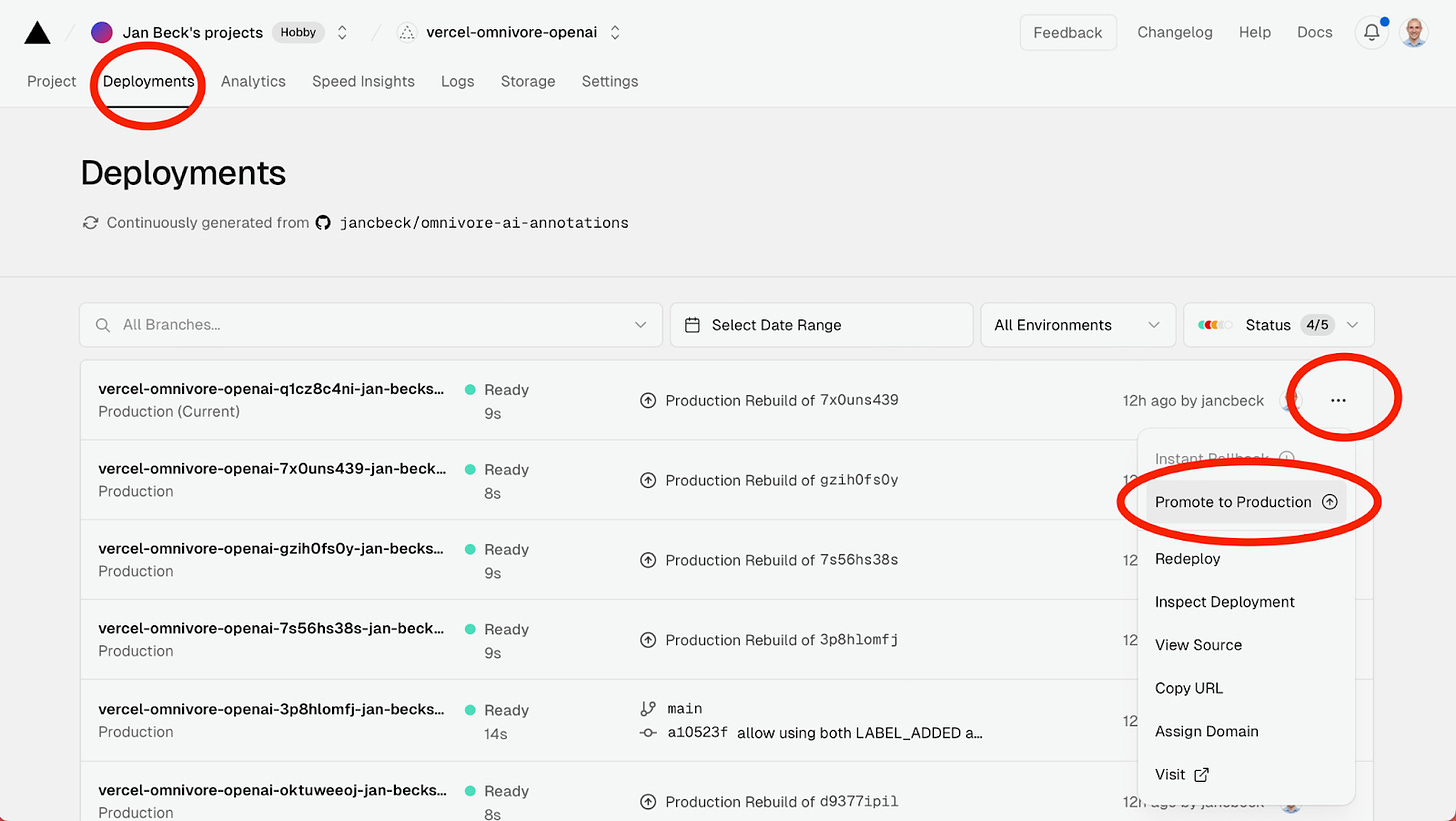

“Speaking of environment variables, an extra step is needed every time you change them: you need to promote the latest deployment to production (see screenshot). So it’s better to set those environment variables during the initial setup of the project to avoid jumping through that hoop every time.”
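Jan’s numbers can be turned into a rough budgeting helper. The TypeScript sketch below is illustrative and not taken from the repository: the environment variable name `OPENAI_MODEL`, the model IDs, and the roughly-4-characters-per-token estimate are assumptions (the estimate is only a rule of thumb for English text; exact counts need a tokenizer). The per-token rate is derived solely from Jan’s test run, not from OpenAI’s price list, which differs by model and changes over time.

```typescript
// Context window sizes in tokens, rounded as in the post (16K and 128K).
// Model IDs are examples; check OpenAI's model list for current names.
const CONTEXT_WINDOWS = new Map<string, number>([
  ["gpt-3.5-turbo-16k", 16000],
  ["gpt-4-turbo", 128000],
]);
const DEFAULT_MODEL = "gpt-3.5-turbo-16k";
const DEFAULT_WINDOW = 16000;

// Pick the model from a hypothetical OPENAI_MODEL variable, falling back to
// the default for missing or unknown values. In the deployed function you
// would pass process.env here.
function selectModel(env: Record<string, string | undefined>): string {
  const requested = env["OPENAI_MODEL"];
  return requested !== undefined && CONTEXT_WINDOWS.has(requested) ? requested : DEFAULT_MODEL;
}

// Rule-of-thumb token estimate: about 4 characters per token for English.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// True if the prompt plus a budget reserved for the reply fits the window.
function fitsContext(promptTokens: number, model: string, replyBudget = 1000): boolean {
  const limit = CONTEXT_WINDOWS.get(model) ?? DEFAULT_WINDOW;
  return promptTokens + replyBudget <= limit;
}

// Average cost per million tokens from an observed usage sample.
function usdPerMillionTokens(costUsd: number, tokens: number): number {
  return (costUsd / tokens) * 1e6;
}

// Jan's GPT-3.5 test run: about 100 requests, 450K tokens, $1.49 in total.
console.log(usdPerMillionTokens(1.49, 450000).toFixed(2)); // 3.31
console.log(450000 / 100); // 4500 (average tokens per request)
console.log(fitsContext(4000, selectModel({}))); // true
```

At that observed rate, a request of about 4K tokens, like the summary mentioned above, costs roughly $0.013 with GPT-3.5; GPT-4 Turbo is more expensive per token, so the same usage would cost more.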

Vercel offers a free plan called Hobby, which supports 35 frameworks, the Edge Network, automatic CI/CD (Git integration), and serverless and edge functions. It should be enough for most users of this project.

Jan’s testing used about 164 execution units out of the 500,000 quota, and the function used 0.04 MB of its 128 MB memory limit. “Edge functions must return a response within 25 seconds, which could be an issue for very long AI generations or when OpenAI is slow. For 16K-token models, this should not be an issue. In the worst case, the function is stopped and the user receives an email about it.”
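One way to avoid hitting that limit abruptly is to stop waiting for OpenAI a little earlier, so the function can still return a response of its own (for example, an error message). The generic helper below is a sketch of that idea, not code from the repository; the 20-second budget and the `callOpenAI` function in the usage comment are hypothetical. Note that it only stops waiting: to actually cancel the HTTP request, you would also pass an `AbortController` signal to `fetch`.

```typescript
// Resolves with the result of `work`, or rejects if it has not settled
// within `ms` milliseconds. The underlying operation keeps running.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Clear the timer either way so it does not keep the process alive.
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}

// Usage (hypothetical callOpenAI helper), leaving headroom under 25 seconds:
// const reply = await withTimeout(callOpenAI(body), 20000);
```

Picking a budget somewhat below the platform limit leaves time to send a response before the function is stopped.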

Viewing the article notes

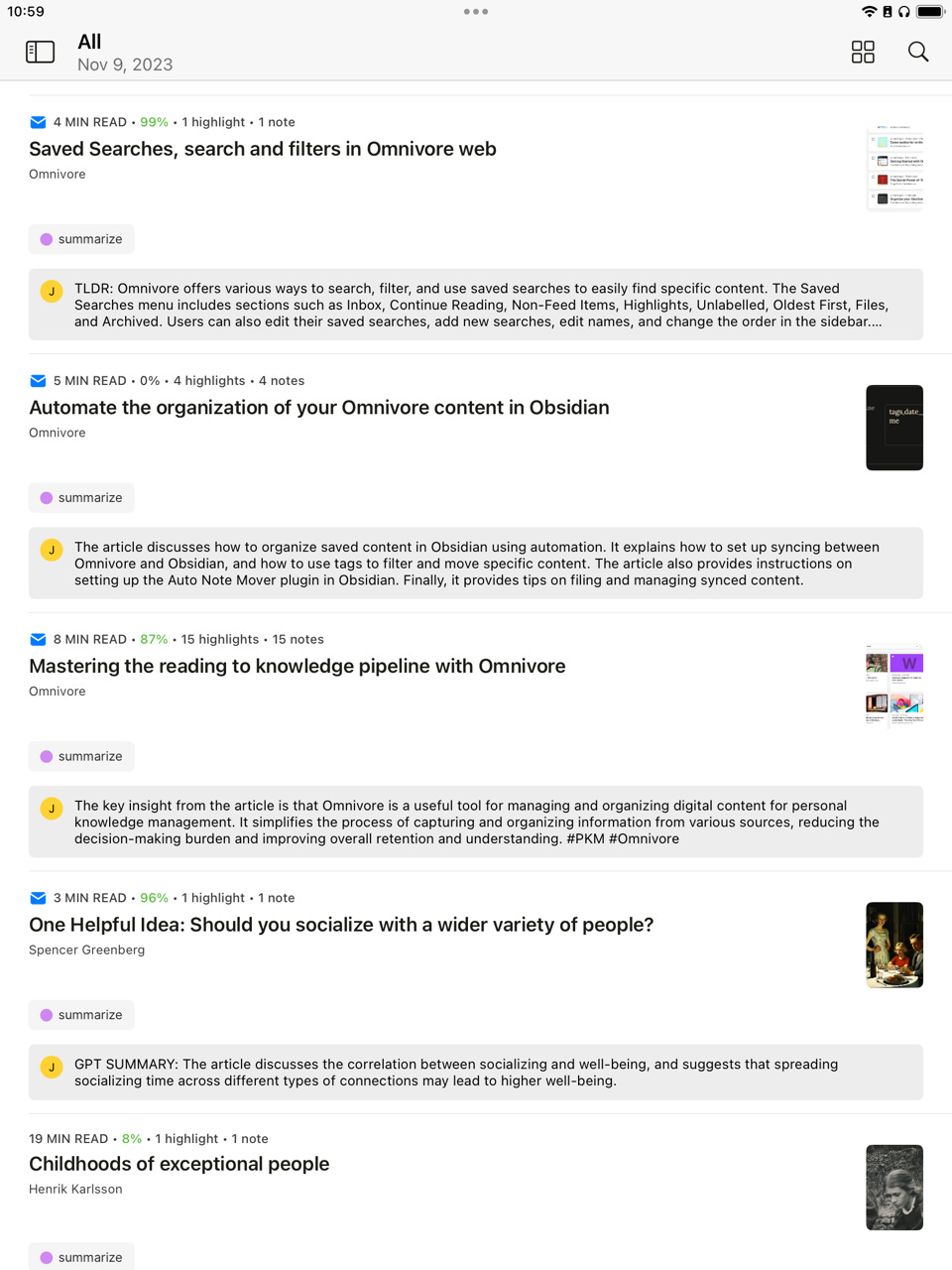

In the iPad app’s list view, Omnivore shows the article notes under each article.

Conclusion

There are many possibilities for integrating AI, and Jan says this can be considered a “proof of concept for a native integration of AI into Omnivore. For example, you could highlight an article passage and write ‘fact check this’ or ‘explain’, and that could trigger GPT. Or you could give GPT access to tools and send it commands, such as highlighting every person mentioned in the article and adding a note with their bio. The whole UI could eventually become conversational and become the main way to interact with text.”

Thanks, Jan, for the development work and for sharing it with us. We always love to see how the community is expanding the use of Omnivore. If any of you have other tools or workflows, let us know!

Editing and proofreading by Steen Comer.

Very nice tutorial. Is it possible to use Perplexity the same way to get article summaries? Thanks. - Peter

Hi @frangrillo, I finally got it working and would love to share it with you.

The secret is that you need to set up a rule first before you can use the webhook.

1. Go to /settings/rules.

2. Create a new rule, give it a name, and set the filter to in:all. I don't need an AI summary for all my posts, so I chose the route of adding a summarize label. So the trigger is 'LABEL_ATTACHED'.

3. Finally, finally, you can set your webhook link in the action field.

I've been able to run it. I've even modified my prompt a bit to suit my needs and switched to the GPT-4o mini model to save a bit more money.

Everything seems to be working now, but the note doesn't show up under my post :-(

Hope this help, cheers!